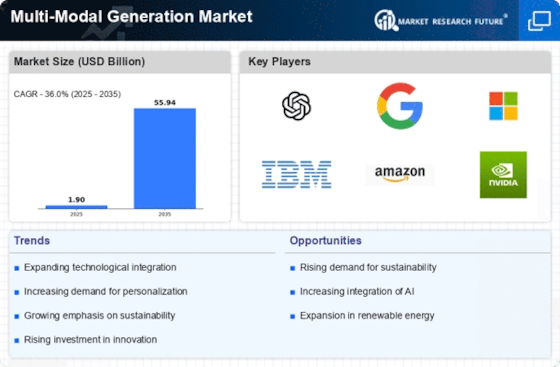

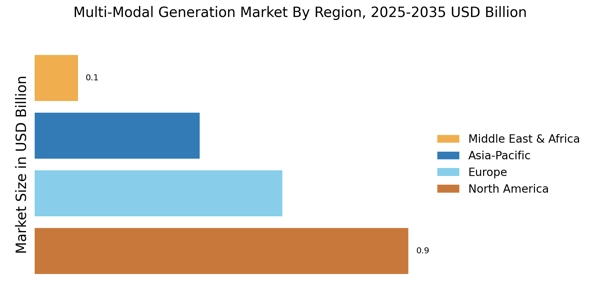

Leading market players are investing heavily in research and development to expand their product lines, which is expected to drive further growth in the Multi-Modal Generation Market. Market participants are also pursuing a variety of strategic initiatives to expand their footprint, including new product launches, contractual agreements, mergers and acquisitions, increased investment, and collaborations with other organizations. To expand and survive in an increasingly competitive and growing market, companies in the Multi-Modal Generation industry must offer cost-effective products.

Manufacturing locally to minimize operational costs is one of the key business tactics that manufacturers in the Multi-Modal Generation industry use to benefit clients and expand the market. In recent years, the Multi-Modal Generation industry has offered significant advantages to organizations.

Major players in the Multi-Modal Generation Market, including Google, Microsoft, OpenAI, Meta, AWS, IBM, Twelve Labs, Aimesoft, Jina AI, Uniphore, Reka AI, Runway, Vidrovr, Mobius Labs, Newsbridge, OpenStream.ai, Habana Labs, Modality.AI, Perceiv AI, Multi-modal, Neuraptic AI, Inworld AI, Aiberry, One AI, Beewant, Owlbot.AI, Hoppr, Archtype, Stability AI, and others, are working to increase market demand by investing in research and development.

Meta Platforms, Inc., doing business as Meta and formerly known as Facebook, Inc. and TheFacebook, Inc., is an American technology company based in Menlo Park, California. Among other products and services, the company owns and operates Facebook, Instagram, Threads, and WhatsApp. Along with Alphabet (Google), Apple, Amazon, and Microsoft, Meta is one of the Big Five American technology companies.

In December, Meta announced plans to roll out multi-modal AI features that gather information about the wearer's surroundings using the cameras and microphones on the company's smart glasses.

While wearing the Ray-Ban smart glasses, customers can say "Hey Meta" to summon a virtual assistant that can see and hear what is happening around them.

Reka AI was founded by researchers from DeepMind, FAIR, and Google Brain. The company works at the forefront of generative models and AI research. Its stated goals include general-purpose multi-modal agents with universal inputs and outputs; proactive knowledge agents that continually improve themselves and stay current without supervision; AI that serves everyone regardless of social norms, cultural background, or other factors; and AI that is effective, efficient, and available at a reasonable cost.

In October, Reka AI, Inc. launched Yasa-1.

This multi-modal AI assistant goes beyond text to understand images, short videos, and audio clips. Businesses can customize Yasa-1 on private datasets spanning different modalities, enabling new experiences across a range of use cases. The assistant can handle long-context documents, execute code, and provide contextually relevant answers drawn from the internet. It supports 20 languages.